How Cube’s Universal Semantic Layer Unlocks a New Generation of AI Apps

Cube is the standard for providing semantic consistency to LLMs, and we are investing in a new $25M financing after leading the seed round in 2020.

We break down the areas where startups utilizing generative AI have the biggest advantage.

Over the past 50 years, the tech industry has been built on two complementary phenomena. One is its ability to take “intelligence” from scarcity to abundance. Thanks to more powerful processors (Moore’s Law) and inexpensive storage, large rooms of people adding up numbers have been shrunk down to a single formula in Excel. The other major trend has been the improvement in user interfaces, as we’ve moved from punch cards to command lines to GUIs to touch screens. This has made “abundant intelligence” more accessible and easier for people to use.

Both of these trends converged with ChatGPT in November 2022. Large language models like GPT-3 and GPT-4 put within reach complex tasks that were previously beyond technology. The form factor, a friendly chatbot, resonates with consumers who’ve been trained by Siri and Google Assistant to ask questions. The impact has been immediate and dramatic. Schools can no longer tell the difference between original work and the output of skillful prompts. Companies fear employees inadvertently fine-tuning models on confidential information. Governments are talking about regulation. Everyone is certain that generative artificial intelligence will transform society, but no one knows exactly how or what to do about it.

That is the opportunity for a new generation of entrepreneurs and why the BCV team is so excited about the coming age of AI. In the past year, we’ve seen three breakout products race past $100 million in revenue from millions of users: ChatGPT, GitHub Copilot and Midjourney. Many others hold the promise to do the same. In some ways, these companies look unlike anything we’ve seen before, with huge compute and infrastructure needs. In others, they look strikingly familiar, with opinionated founders, clear visions and awe-inspiring execution.

Many people look to mobile and cloud as analogs for what the next few years will bring. We believe this is misleading. Mobile and cloud were new distribution channels that favored upstarts over incumbents, whereas AI is a new foundational technology. In that way, it’s more akin to the microprocessor than the iPhone. The distinction matters because new technologies are easier for incumbents to build into their existing solutions. We have already seen this from Microsoft (copilot for everything), Adobe (Firefly) and many other large companies who recognize the opportunity (and threat) and are moving with surprising speed.

The implication: Startups will need to pick their spots carefully. We see several areas where new companies will have the advantage.

In many ways, it’s a marvel that application software has grown to be a $140 billion industry. Ask most users, from salespeople to doctors, and they’ll tell you it has not lived up to the promise, and is often increasing their workloads. That’s because most applications are “dumb” — they map imperfectly to workflows, have no context and require too much work from users. Generative AI has the potential to change that across a broad range of industries.

AI or “copilot” software will massively increase the productivity of a significant portion of the more than one billion knowledge workers worldwide performing general tasks like writing documents, taking notes and performing calculations. This spans front office (sales, marketing), back office (finance, HR) and other functions (IT service management, engineering). AI-powered assistants will vastly improve the customer experience across e-commerce, social media, fintech, on-demand delivery and healthcare, transforming the customer support industry that today employs about 17 million contact center agents. Everyone will become a creator, thanks to LLMs, text-to-image and text-to-video models, transforming industries such as movie production, marketing copywriting and advertising.

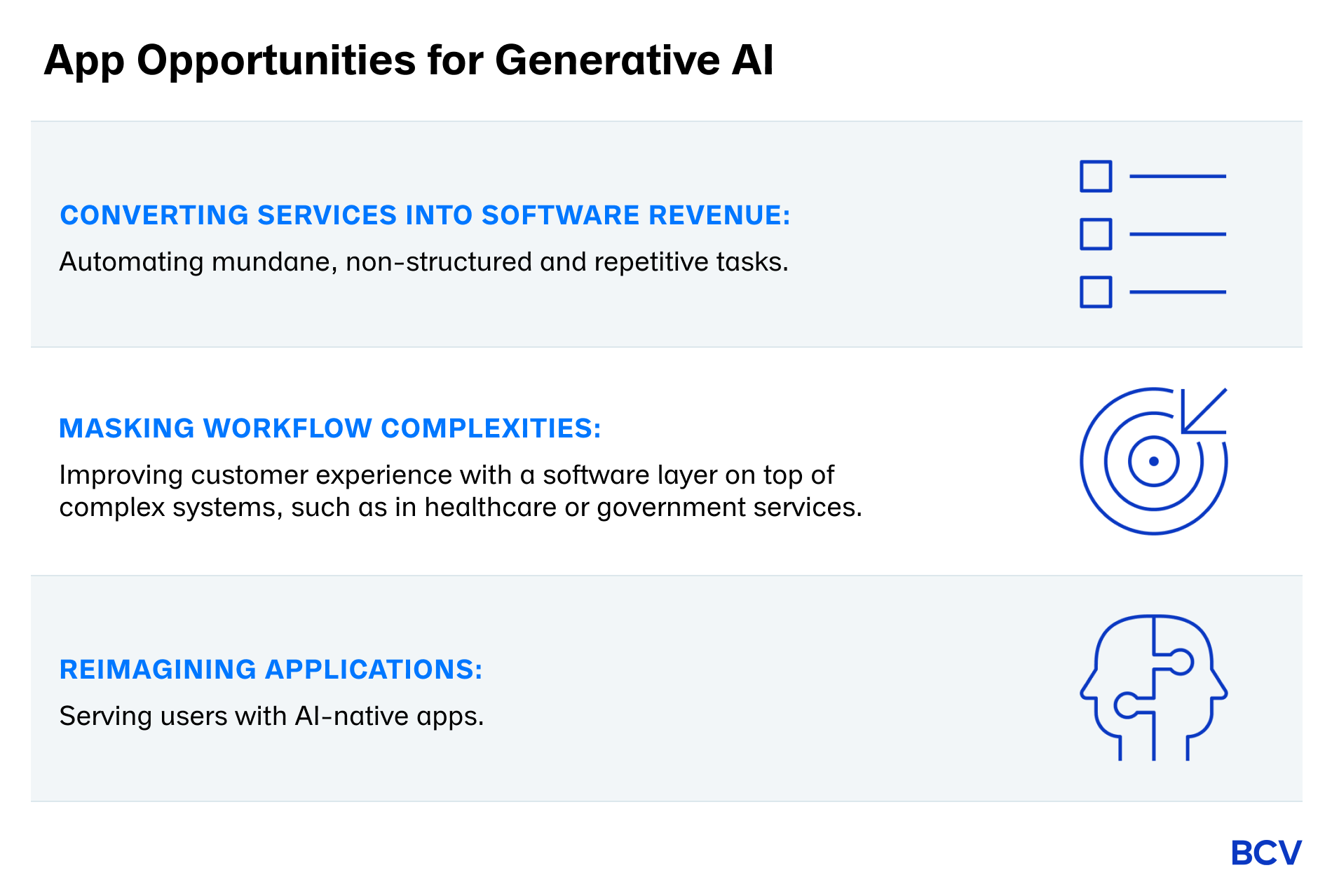

Most of the value created from these obvious opportunities will accrue to incumbents that are already embedded into customer workflows. New companies will form around non-obvious ideas that require both imagination and technical ability beyond the reach of most companies, which remain focused on retrofitting their existing (often strong) businesses with generative AI. We see three main kinds of opportunity for these new companies:

Greenfield opportunities, converting services into software revenue

There are some activities that were previously beyond the reach of software that can now be automated. These are semi-structured repetitive tasks — one founder described them to us as “mundane decisions” — that historically needed just enough human judgment to put them out of reach of deterministic, analytical AI. There are countless examples: software development, legal work, insurance claim processing, anything done by operations teams. The world is awash in mundane decisions that can now be automated. Some companies doing this work include EvenUp, Harvey.ai, Maven and Runway ML.

Masking complexity in workflows that cannot change

It’s easy to reimagine large parts of our economy, such as healthcare or government services, but it’s very hard for them to actually change. With generative AI, it’s now possible to put a layer of software on top of the inefficiencies to vastly improve customer experience. Medical billing, virtual therapy, government procurement, planning approval: the possibilities are endless. These opportunities can still be disruptive to incumbents, even though they form a layer of abstraction on top of underlying systems rather than challenging them directly.

Radical reimagining of what an “application” should be

In productivity software, it took years of iteration before we evolved from Word to Notion, a radically different product. LLMs are a much more profound shift because they change how we interact with software. The chat interface is a familiar start (think designing “a faster horse” or filming radio shows), but what would be possible if apps were truly built in an AI-native way?

Like code, models are complicated and costly to run. Infrastructure tools not only address these issues, but also make models more powerful by chaining them together and improving model accuracy. This, in turn, enables AI to address more complex use cases.

Much attention has been paid to middleware that connects models, such as LangChain and AutoGPT, and to vector databases, like Pinecone, Weaviate and Chroma. But there are a number of equally promising areas that have not attracted as much attention:

Unstructured data management

The data most useful in a workflow is often the hardest to capture. In real time, inboxes get flooded, Slack messages are traded and meaningful knowledge is lost in a chaotic jumble of logs and events. What if we could harness that data and use it downstream? The result would be transformative for both enterprise and consumer applications. The shape of the data would differ depending on the use case, from CAD file ingestion for structural engineers to fuzzy satellite images for naval intelligence to PDFs for investment banks. Extracting, curating and feeding this data to models via few-shot prompts or vector databases unlocks domain specificity regardless of the model or vertical. Some of the companies in this area include Unstructured, Cleanlab, LlamaIndex, Metal, Hybrid Index and Vectara.
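To make the "feeding this data to models" step concrete, here is a minimal sketch of retrieval plus a few-shot prompt. It assumes the unstructured sources have already been extracted into plain-text chunks, and it uses a toy bag-of-words vector as a hypothetical stand-in for a real embedding model and vector database. The helper names (`embed`, `retrieve`, `build_prompt`) and the sample snippets are illustrative only, not any vendor's API.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): lowercase term counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(count * b[term] for term, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query (what a vector DB does at scale)."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(query: str, chunks: list[str], examples: list[tuple[str, str]], k: int = 2) -> str:
    """Assemble a few-shot prompt: retrieved context, worked examples, then the new question."""
    context = "\n".join(f"- {c}" for c in retrieve(query, chunks, k))
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"Context:\n{context}\n\n{shots}\n\nQ: {query}\nA:"


# Usage with made-up snippets from Slack, email and logs.
chunks = [
    "Slack: the Q3 invoice for Acme was approved by finance on Friday.",
    "Email: the office move is scheduled for next month.",
    "Log: deploy 42 of the billing service failed health checks.",
]
examples = [("Who approved the Q2 invoice?", "Finance approved it.")]
prompt = build_prompt("Who approved the Acme invoice?", chunks, examples, k=1)
print(prompt)
```

Only the most relevant snippet reaches the model, which is what keeps the prompt domain-specific without retraining; swapping the toy `embed` for a real embedding model and the sorted list for a vector index would not change the shape of the pipeline.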

Agent-driven automation

Incumbents today are focused on integrating surface-level chat interfaces into their existing products and expecting users to know how and when to interrupt their workflow to use a chatbot. We’re skeptical that chat is a universal modality. The Toolformer paper and ensuing frameworks like AutoGPT and BabyAGI have demonstrated language models’ abilities to call external tools, perform chain-of-thought reasoning and execute workflows by focusing on subtasks. In production settings, though, agents are not suitable for much more than enthralling Twitter threads: they collapse under the weight of recursive hallucination, incur outsized compute costs from repeated calls to an LLM endpoint and lack memory to learn from prior executions. At the risk of egregious anthropomorphism, we believe future agent frameworks will behave as humans do today: with collaboration, cost, memory and utility in mind. One way to achieve this is to use multiple agents, each with a different goal or cost function, and an orchestration layer that assigns tasks to each agent based on the prompt. AutoGPT, BabyAGI and LangChain Agents have dominated the conversation around agent frameworks thus far, and we’re excited to watch the next evolution of the space.
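The multi-agent idea above can be sketched as a small orchestration loop. This is a minimal illustration under several assumptions: the prompt has already been decomposed into `(kind, task)` subtasks (in practice an LLM would typically do that step), each hypothetical `Agent` declares the task kinds it handles and a fixed per-call cost, the routing rule is simply "cheapest capable agent," and "memory" is just a cache of prior executions. It is not the API of AutoGPT, BabyAGI or LangChain.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    """Hypothetical agent: the task kinds it handles, a per-call cost, and a handler."""
    name: str
    skills: set[str]
    cost: float
    run: Callable[[str], str]


@dataclass
class Orchestrator:
    agents: list[Agent]
    # Memory of prior executions, keyed by (kind, task), so repeated subtasks cost nothing.
    memory: dict[tuple[str, str], str] = field(default_factory=dict)
    spent: float = 0.0

    def assign(self, kind: str) -> Agent:
        """Route a subtask to the cheapest agent whose skills cover it."""
        capable = [a for a in self.agents if kind in a.skills]
        if not capable:
            raise ValueError(f"no agent can handle {kind!r}")
        return min(capable, key=lambda a: a.cost)

    def execute(self, subtasks: list[tuple[str, str]]) -> list[str]:
        """Run subtasks in order, reusing remembered results instead of paying again."""
        results = []
        for kind, task in subtasks:
            key = (kind, task)
            if key not in self.memory:
                agent = self.assign(kind)
                self.memory[key] = agent.run(task)
                self.spent += agent.cost
            results.append(self.memory[key])
        return results


# Usage: two stub agents standing in for LLM-backed workers.
researcher = Agent("researcher", {"search", "summarize"}, cost=1.0, run=lambda t: f"[researcher] {t}")
writer = Agent("writer", {"summarize", "draft"}, cost=0.2, run=lambda t: f"[writer] {t}")
orch = Orchestrator([researcher, writer])
out = orch.execute([("search", "competitors"), ("summarize", "competitors"), ("search", "competitors")])
print(out, orch.spent)
```

Here the "summarize" subtask goes to the cheaper writer even though the researcher could also do it, and the repeated "search" is served from memory, so the run costs 1.2 rather than 2.2. A real orchestration layer would likely weigh quality and latency as well as cost.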

Evaluation

Most LLM-based apps we’ve seen in production today chain together various open-source and hosted models, leading to two issues: model selection and model experimentation.
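To make model selection and experimentation concrete, here is a minimal sketch of an offline evaluation harness that scores every candidate on the same test cases and picks the cheapest one that clears a quality bar. The stub models, per-call costs, three-question test set and exact-match metric are all hypothetical; real harnesses typically use larger test sets and task-specific metrics.

```python
from typing import Callable

Model = Callable[[str], str]  # a candidate maps a prompt to a completion


def exact_match(output: str, expected: str) -> float:
    """1.0 if the normalized output equals the expected answer, else 0.0."""
    return float(output.strip().lower() == expected.strip().lower())


def evaluate(model: Model, cases: list[tuple[str, str]]) -> float:
    """Mean exact-match score of a model over (prompt, expected) cases."""
    return sum(exact_match(model(p), e) for p, e in cases) / len(cases)


def select_model(candidates: dict[str, tuple[Model, float]],
                 cases: list[tuple[str, str]], min_score: float) -> str:
    """Return the cheapest candidate (name -> (model, cost per call)) scoring >= min_score."""
    scores = {name: evaluate(model, cases) for name, (model, _) in candidates.items()}
    passing = [name for name, score in scores.items() if score >= min_score]
    if not passing:
        raise ValueError(f"no candidate reached {min_score}: {scores}")
    return min(passing, key=lambda name: candidates[name][1])


# Usage with stub "models" standing in for hosted or open-source LLM calls.
answers = {"Capital of France?": "Paris", "2 + 2?": "4", "Largest planet?": "Jupiter"}
cases = list(answers.items())


def large(prompt: str) -> str:
    return answers[prompt]  # always correct


def small(prompt: str) -> str:
    return "Saturn" if prompt == "Largest planet?" else answers[prompt]  # misses one case


def tiny(prompt: str) -> str:
    return "I don't know"  # never correct


candidates = {"large": (large, 10.0), "small": (small, 1.0), "tiny": (tiny, 0.1)}
print(select_model(candidates, cases, min_score=0.6))  # small
print(select_model(candidates, cases, min_score=0.9))  # large
```

Requiring a minimum score before comparing costs keeps a cheap but inaccurate model from winning by default, and rerunning the same harness whenever a new model ships turns experimentation into a repeatable comparison.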

It’s dangerous to make predictions when the landscape shifts on a weekly basis. But our current best guess is that three kinds of companies will be on the leading edge of generative AI in the enterprise.

Foundation model providers like OpenAI and Anthropic

These will partner with enterprises directly and sell through hyperscalers (Azure/OpenAI, Oracle/Cohere, Google/Bard) and through partnerships with consulting firms. They will provide foundation models (Claude 5 or GPT-7) with enterprise functionality (running in the customer’s VPC) and create an ecosystem of prompt engineers around themselves (much as Salesforce has done with app administrators and consultants). Open-source models will continue to proliferate throughout the enterprise, offering Databricks/MosaicML, Together and others an opportunity to capture the training and serving stack. The “OpenAI has no moat” memo that leaked out of Google and Sam Altman’s proclamation that the large model race is drawing to a close suggest that models will compete not on size, but rather on particular ergonomic qualities that help them outperform in specific situations (e.g. Med-PaLM 2 on PubMedQA).

Domain specific models — full stack applications that have trained their own models

The need for these models exists because of limited context windows, the existence of proprietary information that’s not easily absorbed into the large foundation models and the emergent capabilities of large models. For example, new code generation models could take advantage of emergent capabilities in inferring cloud architecture and code performance characteristics. These capabilities would be emergent because the model would be trained only on Git data, not on architecture schematics or code profiling charts. The trade-off is that the model gives up creativity: you can’t get code profiling summaries the way Shakespeare would have delivered them, but the model would still produce preferable results from a developer’s perspective.

Platforms for building autonomous agents

For these, the foundation model is not the atomic unit, as people gravitate toward more complex systems with multiple integrated components.

These agents can act like an infinite army of researchers, “chiefs of staff” or really smart interns. They can be trained on a company’s tribal knowledge, kept current through retrieval systems (a feedback loop through localized OpenAI plugins within the company) and used to automate the many company-specific workflows that are too niche to support commercial products.

Founders building generative products and infrastructure have a unique opportunity to build generational companies, but have to choose their arena carefully. If you’re thinking about building in one of these areas, or think there are areas we haven’t explored that are standout categories, drop us a line. We’d love to hear from you. Reach us at aaref@baincapital.com and rgarg@baincapital.com.

Thanks to Slater Stich, Saanya Ojha and Sam Crowder for their contributions to this piece.
